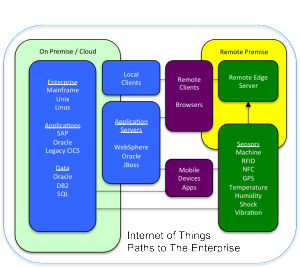

The world of enterprise IT has historically architected networks and applications in a hub-and-spoke model. The reasons for this are simple: it’s all about the data, and the data is still kept in a glass house that is owned and managed by corporate IT. This could mean Oracle databases, SAP applications, etc. These environments take a great deal of care and feeding, must be backed up and have disaster recovery plans in place, and require a unique skill set to manage. If these systems are compromised in any way heads will roll, and the teams responsible for the integrity of these systems play an integral role in the design of the network infrastructure.

First, a brief history lesson…

Prior to the introduction of the IBM PC in the enterprise, the network communications groups were fairly small and had unique skills in technologies like IBM’s Virtual Telecommunications Access Method (VTAM) and Systems Network Architecture (SNA). The number of external connections was minimal and generally limited to connecting with other enterprise locations that were dependent on the company “system”.

At this point in time the “Edge” of the network was an IBM 37XX series communication controller for IBM mainframes.

As the need for external communications grew, the responsibility for designing and maintaining network connections fell to these same centrally located individuals. MIS teams frowned upon external network connections and made sure they kept a tight rein on things. In the early days the only possible way to “dial in” to the system was using a preconfigured PC and modem provided by the MIS team. They had a very limited number of “dial in” phone numbers that were shared across the company. If you tried to dial in and got a busy signal you simply tried one of the other numbers.

The Edge of the network had now extended to PCs that were still configured and managed by MIS.

In the late 1990s the advent of Internet Service Providers (ISPs) and the “world wide web” meant IT could begin to move away from managing dial-in applications and get a handle on communications costs and scaling. When the first true web-centric application development tools appeared, enabling previously static web pages to connect to back-end systems, the important components such as the database and network management systems were still managed within the glass house. Even then, the first web-centric, browser-based applications were rolled out as intranets that were only accessible from behind corporate firewalls. Chances are that if people were in a position to pass through the corporate firewall, they were still using a company-supplied PC pre-configured by the IT group.

At this point the Edge of the network was the corporate firewall.

In the late 90s and early 2000s, when enterprises were broadly adopting a three-tier architecture, innovative vendors helped create a broad set of tools to develop and deploy scalable, enterprise-class web-based applications. The network groups still kept control of everything behind the firewall, but the application development teams now needed to make sure their new web-based applications supported the popular browser of the day. This was especially important now that individuals were using equipment that had not been provided by the company.

The first true web browser accepted by the enterprise was Netscape Navigator. Microsoft had missed the boat on the rise of the internet and it took years for IE to become a viable alternative. In true Microsoft fashion they attempted to dominate the browser market by embedding their own “standards” and not supporting the de facto standards and technologies that had already been accepted by early web developers. Application testing now included browser testing, down to the version level, so that end users could be told exactly what they needed in order to access the application. Ironically enough, it was not uncommon for IT teams to fall back on the old trusty “sneakernet” to install “thin client” browsers across an enterprise prior to rolling out a new intranet application.

While not as cut and dried as before, the Edge of the network was now the web-facing application server instances.

The first supported “mobile” applications were really thin client browsers based on the Wireless Application Protocol (WAP). WAP microbrowsers were initially supported on a limited number of mobile devices and were essentially very thin, stripped-down web browsers that provided basic access to email and news headlines. Support for WAP was weak, and the key application, email, was quickly dominated by the proprietary BlackBerry devices and ecosystem that had been deployed very successfully in the enterprise.

For enterprise mobile device support the Edge of the network was the BlackBerry Enterprise Server.

This is where things really get interesting.

Mobile fat client hand-held computers such as those developed by Symbol Technologies and Intermec had been in place for many years before what we know today as “smart phones”. These devices typically communicated with enterprise applications through Windows PCs by a limited sync process. Broader deployment of these systems required added capabilities that took the manual sync process out of the hands of end users. In most instances these devices were used in silo applications (e.g. asset management) that were not considered mission critical and almost exclusively stayed within the four walls of the enterprise.

The introduction of the iPhone in 2007, quickly followed by the Android operating system in 2008, was the catalyst for consumer adoption of mobile smart phones. These devices were still dependent on the thin-client, browser-based model, and communication was very slow (and expensive); 3G wireless networks were just being rolled out. The enterprise was still deeply committed to the BlackBerry infrastructure, which meant that non-BlackBerry smart phone access to company email and applications was not supported.

As consumer smart phones became more ubiquitous and enterprise IT was effectively forced to support non-BlackBerry devices, it became obvious that the thin-client model was not going to work; the fat client smartphone app was born. This was very similar to the process the enterprise went through when going from mainframe to client-server. The problems started to escalate when senior executives started showing up at the door of the IT group asking when their latest iThingy would be supported. The fact that they showed up with a budget helped move things along.

The old problems began to arise again: apps still needed to be tested across multiple platforms, versions of client apps needed to be managed, people still wanted to access apps through web browsers so those needed to be supported, and companies needed to develop mobile versions of web apps to limit the amount of data and improve performance on mobile devices. In time Mobile Device Management (MDM) companies appeared on the scene to provide the type of tools required to manage deployment of mobile applications in the enterprise.

At this point in time the mobile devices (limited to the legacy hand-held computers and MDM-managed devices) were the Edge of the network.

Whew…

In 2003 Wal-Mart issued a mandate to their suppliers that required cases and pallets of products shipped to Wal-Mart to have a passive RFID tag affixed that met industry standards being developed at the time. As an early player in the RFID industry I saw a great deal of thrashing among companies trying to bridge the gap between RFID readers and the enterprise. Many of the “solutions” put forth were little more than a collection of batch data synchronization tools that were often referred to as “platforms”. However, with companies like Wal-Mart driving the RFID industry to support enterprise-class, near real-time visibility of supply chain operations, those “platforms” were not going to cut it.

The challenge for customers was that they had dozens, and in a few cases hundreds, of fixed and hand-held RFID readers spitting out reams of data with no real way to parse and sort the data before sending it back to the enterprise. A limited set of companies began using traditional Java application server technology and developed interfaces to select RFID readers (typically Windows or Linux devices). This still presented a different set of challenges, since many of the RFID projects were pilot projects driven by customer mandates and support from enterprise IT was not forthcoming. To further complicate things, many of these customer organizations had already standardized on a specific application server platform (e.g. IBM WebSphere), and the responsibility to get in line with the corporate standards fell on the platform vendor. With very few exceptions, the solutions that eventually were developed were silo applications that at best were loosely coupled with back-end enterprise systems.

A few visionary companies saw this as an opportunity and started developing network appliances, essentially Linux boxes with pre-configured software installed, specifically designed to manage broad deployments of auto-ID devices including RFID readers, and to manipulate and parse data from those devices before shipping the data to the enterprise. These appliances could also communicate peer-to-peer and be configured to share workloads and support automated load balancing and fail-over. In my experience these devices were the first “physical” edge servers. They were also very expensive and provided much more capability than companies needed or were willing to pay for. Unfortunately some of these companies took too much money from investors too early in the market and ran out of cash before the market was ready for the solution they were offering.
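To make the “parse before shipping” idea concrete, here is a minimal sketch of one kind of filtering such an appliance might do: dropping repeat reads of the same tag at the same reader inside a short time window, so that only meaningful events go upstream. This is an illustration under my own assumptions, not how any particular product worked; the names `TagRead` and `dedupe_reads`, the reader name, and the five-second window are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TagRead:
    """One raw read event (hypothetical shape, for illustration only)."""
    reader_id: str    # which reader saw the tag
    tag_id: str       # tag identifier as a string
    timestamp: float  # seconds since some reference point


def dedupe_reads(reads, window_seconds=5.0):
    """Forward a read only if the same reader has not already forwarded
    the same tag within the last `window_seconds` seconds."""
    last_forwarded = {}  # (reader_id, tag_id) -> timestamp of last forwarded read
    kept = []
    for read in sorted(reads, key=lambda r: r.timestamp):
        key = (read.reader_id, read.tag_id)
        previous = last_forwarded.get(key)
        if previous is None or read.timestamp - previous >= window_seconds:
            kept.append(read)
            last_forwarded[key] = read.timestamp
    return kept


if __name__ == "__main__":
    raw = [
        TagRead("dock-door-1", "TAG-A", 0.0),
        TagRead("dock-door-1", "TAG-A", 1.0),  # repeat inside the window
        TagRead("dock-door-1", "TAG-B", 1.5),
        TagRead("dock-door-1", "TAG-A", 6.0),  # outside the window, kept
    ]
    for read in dedupe_reads(raw):
        print(read)
```

A reader sitting over a dock door can report the same tag many times a second, so even a crude filter like this cuts the volume sent back to the enterprise dramatically.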

At approximately the same time I came across a company that was developing an open source RFID middleware solution called RFIDi. They were also providing a “software as hardware” option: the RFIDi server pre-installed on a very low cost Linux appliance. While deployments of these systems are still fairly rare, this type of edge server appliance-based architecture has been well received.

At this point the potential exists for the Edge of the network to be an edge server appliance managing peripheral devices (e.g. RFID readers) and sensors.

If you buy into the idea that the value of IoT in the enterprise lives at the “Edge” (I certainly do) then you also recognize that the edge can be quite a mess. If you consider that you have smartphones, sensors, proprietary M2M solutions, device controllers, switches, power systems, infrastructure installations, etc., none of which were designed to work together, you have a tangled mess of highly capable devices that are incapable of communicating with one another. Furthermore, the traditional legacy enterprise hub-and-spoke architecture simply will not work if an organization is to get the true value of IoT. These devices must be able to communicate peer-to-peer and operate asynchronously with the enterprise through multiple communications methods and one or more cloud instances. They must support forward recovery similar to enterprise transaction systems and message queuing solutions, and plug in seamlessly to enterprise environments.
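For readers who have not worked with message queuing, the forward recovery requirement above can be sketched roughly as follows: every message is written to a local journal before the device tries to send it, and after a crash or a lost link the device rebuilds its list of unsent messages by replaying that journal. This is a simplified illustration of the idea, not a production design; the class name `StoreAndForwardQueue`, the JSON-lines journal format, and the `send` callback are my own assumptions, and a real implementation would also need to handle torn writes and journal compaction.

```python
import json
import os


class StoreAndForwardQueue:
    """Hypothetical edge-side queue that survives restarts by replaying a journal."""

    def __init__(self, journal_path):
        self.journal_path = journal_path
        self.pending = {}  # message id -> payload not yet acknowledged
        self._replay()

    def _replay(self):
        # Forward recovery: rebuild state by re-reading the journal from the start.
        if not os.path.exists(self.journal_path):
            return
        with open(self.journal_path, encoding="utf-8") as journal:
            for line in journal:
                entry = json.loads(line)
                if entry["op"] == "put":
                    self.pending[entry["id"]] = entry["payload"]
                elif entry["op"] == "ack":
                    self.pending.pop(entry["id"], None)

    def _append(self, entry):
        # Write to disk before acting, so a crash cannot lose the record.
        with open(self.journal_path, "a", encoding="utf-8") as journal:
            journal.write(json.dumps(entry) + "\n")
            journal.flush()
            os.fsync(journal.fileno())

    def put(self, message_id, payload):
        self._append({"op": "put", "id": message_id, "payload": payload})
        self.pending[message_id] = payload

    def ack(self, message_id):
        self._append({"op": "ack", "id": message_id})
        self.pending.pop(message_id, None)

    def flush(self, send):
        """Try to deliver every pending message; `send` returns True on success."""
        for message_id, payload in list(self.pending.items()):
            if send(payload):
                self.ack(message_id)
```

The point of the design is that the device never depends on the enterprise being reachable: it keeps accepting data locally and catches up whenever a connection becomes available.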

In a strange way it appears as if everything has come full circle. The legacy on-premises “glass house” model is slowly moving toward “the cloud.” Instead of business-unit-specific purchased applications maintained by IT we now have cloud-based subscription software licensing models. We have gone from thin client terminals, which require very few support resources from IT, to fat client PCs, to three-tier architectures with thin client browsers, to thin client mobile devices, to fat client consumer mobile devices…which interestingly enough require very little support from IT. All the while, what was seen as the “edge” of the network has morphed in response to the needs of users, with an eye on enterprise stability and security.

And the winner is…

In my opinion the best near-term architecture to support IoT in the enterprise would consist of “software as hardware” edge server appliances that communicate with either on-premises or cloud-based applications offering enterprise-class scalability, fail-over, fault tolerance, and standards-based integration modules. These devices would need to manage a multitude of device types, communicate peer-to-peer, and support a variety of communication methods including Ethernet, Wi-Fi, Bluetooth, cellular, and satellite, switching among them dynamically and securely based on availability and pre-defined business rules. These devices must support standard runtime environments (e.g. Java) for hosting edge-centric applications (e.g. analytics) and support deployment in a way that lets enterprise developers use existing tools and methodologies. Most importantly, they must be easy to deploy.
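As a rough illustration of what “switch based on availability and pre-defined business rules” could look like, the sketch below picks the first acceptable link from an ordered preference list. Everything here is hypothetical: the `Link` fields, the `choose_link` function, and the two example rules (require encryption, cap the cost per megabyte) are stand-ins for whatever rules a given business would actually define.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    """Snapshot of one communication path (hypothetical fields)."""
    name: str
    available: bool
    cost_per_mb: float
    encrypted: bool


def choose_link(links, preference, require_encryption=True, max_cost_per_mb=None):
    """Return the first link in `preference` order that is available and
    passes the business rules, or None if nothing qualifies."""
    by_name = {link.name: link for link in links}
    for name in preference:
        link = by_name.get(name)
        if link is None or not link.available:
            continue
        if require_encryption and not link.encrypted:
            continue
        if max_cost_per_mb is not None and link.cost_per_mb > max_cost_per_mb:
            continue
        return link
    return None


if __name__ == "__main__":
    links = [
        Link("ethernet", available=False, cost_per_mb=0.0, encrypted=True),
        Link("wifi", available=True, cost_per_mb=0.0, encrypted=False),
        Link("cellular", available=True, cost_per_mb=0.05, encrypted=True),
        Link("satellite", available=True, cost_per_mb=5.0, encrypted=True),
    ]
    order = ["ethernet", "wifi", "cellular", "satellite"]
    print(choose_link(links, order))
```

In this example Ethernet is down and the Wi-Fi link is not encrypted, so the device falls through to cellular. When no link qualifies, the function returns `None` and the device would simply keep queuing data locally until one does.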

As someone with a long history in enterprise IT, both as an early techie and later as a sales/marketing/biz dev guy, I find this space particularly interesting. I have stumbled upon a few select vendors from outside the traditional IT market that seem to be very well positioned to deliver exactly this type of solution. I’ll be digging into this a bit more and will let you know what I find…stay tuned.

For the past several months I have been engaging with Enterprise IT executives talking about the “Internet of Things”. In many cases the eye rolls start before I’m through the first sentence. As the conversation continues they “get it” but still see IoT as more hype than substance and it is not currently on their radar screen. Their primary challenges include things like managing smart mobile devices, cloud computing, and converged networks. “Yes”, I say, “That’s IoT”, but I can tell they are still thinking about Fitbits and iPhones and even perhaps recalling the RFID pilot project they were considering a few years ago. Hmmm!
